Artificial intelligence has made giant strides in recent years. Models developed in the last few years, led by ChatGPT and Google AI, can understand and generate human-like text, which makes them useful for applications such as conversational agents and sophisticated data analysis tools. However, the work behind the scenes, training and deploying these AI models, requires huge computational power. The true heroes driving this AI transformation are the massive data centers that house the infrastructure making it all possible.

AI Training Scale: Why Massive Data Centers Are Required

Training AI models like ChatGPT or Google AI is not an easy task. A model has to process vast amounts of data (text, images, and video) before it can learn and make accurate predictions. This training process runs complex algorithms across those datasets and requires a huge amount of computational power. This is where data centers come into play.

Computational Power Needs

AI training demands so much processing power that it has to be supplied by specialized hardware such as GPUs and TPUs. These processors execute thousands of operations simultaneously, which is precisely the kind of processing needed to train large AI models. To accommodate the sheer scale of these operations, however, thousands of such processors need to work in parallel, and that requires the kind of expansive infrastructure found only in large data centers.

Data Storage Requirements

Beyond raw computational power, AI training also places extremely high demands on storage. Training datasets can run to petabytes or more, and all of that data has to be stored efficiently and delivered to the processors fast enough to keep them busy. Advanced storage solutions in large-scale data centers make this possible, keeping data readily available so that AI models can learn and adapt quickly.
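To give a feel for why storage throughput matters as much as capacity, here is a small back-of-the-envelope sketch. The dataset size and throughput figures are illustrative assumptions, not measurements from any real facility, and `full_pass_seconds` is a hypothetical helper written for this example.

```python
# Rough estimate: how long does one full read of a training dataset take?
# All figures below are assumed values for illustration only.

PB = 10**15  # bytes in a petabyte (decimal)
GB = 10**9   # bytes in a gigabyte (decimal)

def full_pass_seconds(dataset_bytes, throughput_bytes_per_s):
    """Time to stream the whole dataset once at a sustained throughput."""
    return dataset_bytes / throughput_bytes_per_s

# Assumed: a 1 PB dataset read at an aggregate 100 GB/s.
seconds = full_pass_seconds(1 * PB, 100 * GB)
hours = seconds / 3600

print(f"One pass over the data: {seconds:.0f} s (about {hours:.1f} hours)")
```

Under these assumptions a single pass takes a little under three hours, and a storage system ten times slower would stretch that to more than a day, leaving expensive processors idle while they wait for data.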

The Role of High-Performance Computing in AI Development

These huge data centers have infrastructure designed for high-performance computing, which is the backbone of AI development.

AI Infrastructure

An AI training environment contains thousands of GPUs and TPUs arranged in clusters to maximize parallel processing. This allows several models to train at once, or multiple parts of a larger model to train in parallel, drastically cutting the time needed to reach the target performance.

Parallel Processing

Parallel processing is one of the cornerstones of AI training. Rather than pushing all the data through a single processor, the workload is distributed across a host of processors, so a model can work through far more data in less time. Data centers provide the scale that makes this distribution practical, which speeds up training. This ability is critical in refining models like ChatGPT, which have to understand and generate human-like responses on a vast array of subjects.
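One common way of distributing the workload is data parallelism: each worker computes a gradient on its own slice of the data, and the results are averaged before the model is updated. The toy sketch below illustrates the idea on a one-parameter model using Python threads. It is an assumption-laden illustration, not how ChatGPT or any production system is trained; the function names are hypothetical, and real clusters use GPUs and dedicated communication libraries rather than threads.

```python
from concurrent.futures import ThreadPoolExecutor

def shard_gradient(w, shard):
    """Gradient of the mean squared error for y ~ w * x on one shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)

def split_into_shards(data, num_workers):
    """Deal examples round-robin; shards are equal when sizes divide evenly."""
    return [data[i::num_workers] for i in range(num_workers)]

def data_parallel_gradient(w, shards):
    """Each worker handles one shard; the shard gradients are then averaged."""
    with ThreadPoolExecutor(max_workers=len(shards)) as pool:
        grads = list(pool.map(lambda s: shard_gradient(w, s), shards))
    return sum(grads) / len(grads)

def train(data, num_workers=4, lr=0.01, steps=200):
    """Plain gradient descent using the averaged gradient at every step."""
    shards = split_into_shards(data, num_workers)
    w = 0.0
    for _ in range(steps):
        w -= lr * data_parallel_gradient(w, shards)
    return w

# Toy dataset: 8 examples that follow y = 3x exactly.
data = [(float(x), 3.0 * x) for x in range(1, 9)]
w_fit = train(data)
print(f"learned weight: {w_fit:.4f}")
```

Because the shards here are the same size, the averaged gradient matches the gradient computed on the full dataset, so splitting the work changes how fast a step can be computed but not the result of the step.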

Effect on Training Speed and Model Quality

The faster an AI model is trained, the sooner it can be deployed and fine-tuned. By speeding up the training process, high-performance data centers also support the overall quality of the models: with more computational power available, AI models can be trained on larger datasets, which tends to yield more accurate and reliable results.

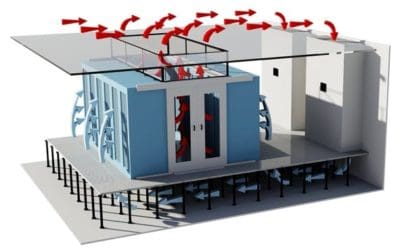

Data Center Cooling and Energy Management

AI training is incredibly computationally intensive. Its heavy energy consumption is matched by heavy heat generation, which poses its own problems for data center management.

Energy Demands

Thousands of GPUs and TPUs running non-stop consume enormous amounts of energy. Data centers that host AI infrastructure therefore have to be designed with energy use in mind, accounting not just for the power needed to run the hardware but also for the energy used to cool it.
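A standard way to express this overhead is Power Usage Effectiveness (PUE): total facility energy divided by the energy used by the IT equipment itself. The sketch below works through the arithmetic with assumed figures. The accelerator count, per-device wattage, and PUE value are hypothetical, and the overhead covers cooling together with other non-IT loads such as power distribution and lighting.

```python
def facility_energy_kwh(num_accelerators, watts_per_accelerator, hours, pue):
    """Return (IT energy, overhead energy, total energy) in kWh.

    PUE = total facility energy / IT equipment energy, so
    total = IT * PUE and the overhead is the difference.
    """
    it_kwh = num_accelerators * watts_per_accelerator * hours / 1000
    total_kwh = it_kwh * pue
    return it_kwh, total_kwh - it_kwh, total_kwh

# Assumed: 1,000 accelerators drawing 500 W each for 24 hours at a PUE of 1.5.
it_kwh, overhead_kwh, total_kwh = facility_energy_kwh(1000, 500, 24, 1.5)

print(f"IT equipment: {it_kwh:.0f} kWh")
print(f"Cooling and other overhead: {overhead_kwh:.0f} kWh")
print(f"Facility total: {total_kwh:.0f} kWh")
```

With these assumed numbers, every 2 kWh delivered to the processors costs another 1 kWh in overhead, which is why improvements in cooling efficiency translate directly into lower total energy use.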

Solutions for Cooling

Cooling is essential to data center operations, and even more so in high-performance computing environments. Advanced cooling technologies, including liquid cooling and air cooling systems, keep the hardware at its optimal operating temperature. Without effective cooling, the heat coming off the processors could cause hardware failures and, with them, long periods of operational downtime.

Airflow Management

Airflow management is equally important in these environments. This is where products like EziBlank’s blanking panels step in: they play a critical part in managing the airflow through server racks, making sure that hot air is effectively evacuated and cool air is channeled to where it is needed most. This not only makes cooling more efficient but also helps reduce overall energy consumption.

Data Centers Form the Backbone of AI Model Deployment

Once AI models are trained, they have to be deployed in a way that lets them run efficiently and respond to queries in real time. Here again, data centers play a huge role.

From Training to Deployment

After training, models such as ChatGPT and Google AI are deployed at scale across different platforms to process real-time data and reply almost instantaneously. Deployment therefore requires uninterrupted access to computing power and data, which is provided by the same kind of data centers that handle the processing during training.

Real-Time Processing Needs

Low latency and strong connectivity are essential if AI models are to work effectively in real-time applications. Data centers provide the infrastructure that lets AI models generate quick and accurate responses, whether for a search engine query or a conversational AI system.

Scalability and Flexibility

The continual improvement of AI models places ever higher demands on data centers. These facilities must scale so that growing workloads, and the deployment of ever more complex AI models, are handled without a hitch. They must also be flexible: AI technologies are evolving quickly, so data centers need to keep pace with new hardware and software requirements.

Future of AI and Data Centers

As AI continues to grow, the relationship between AI and data centers will only deepen, driving innovation in both fields.

Growth of AI Models

AI models are getting larger and more complex, and so they need more computational power and storage. This is already a clear sign that data centers will have to keep expanding and evolving to meet these growing demands.

Sustainability Challenges

With great power comes great responsibility, especially when it comes to sustainability. The energy demands of AI models are huge, and there is growing pressure on data centers to become more sustainable by integrating renewable energy sources into their operations, increasing energy efficiency, and adopting new cooling technologies that can lighten their environmental footprint.

The Role of AI in Optimizing Data Centers

Interestingly, AI itself is helping to optimize data center operations. AI-driven systems can monitor and manage energy use, predict maintenance needs, and even optimize airflow management so that data centers run as efficiently as possible, with minimal downtime and at minimal cost.

Massive data centers are the unsung heroes behind the success of AI models such as ChatGPT and Google AI. They provide the computational power, storage capacity, and energy management needed to train and deploy such sophisticated technologies. Their role will only grow as AI advances, further driving innovation in both areas. Next time you’re interacting with an AI, remember that it’s not just algorithms that make this possible; it’s the incredible infrastructure of data centers that powers it all.