Artificial intelligence is advancing rapidly and reshaping industries worldwide. From large language models like those behind ChatGPT to advanced computer vision systems, the range of what AI can do keeps widening. These advancements do not happen in a vacuum; they call for immense computational power and sophisticated infrastructure. At the core of this shift are NVIDIA GPUs, which power the next generation of AI data centers that make these technological leaps possible.

Need for AI-Optimised Data Centers

Today's models are larger and more complex than their predecessors, and the compute needed to train and deploy them has grown steeply. Traditional data centers, whose architecture is designed for general-purpose computing, struggle to keep up with these demands. As a result, AI workloads are moving to specialised data centers built to deliver the processing power and data throughput that modern AI models require.

Why NVIDIA GPUs?

NVIDIA's chips have become a de facto standard for AI training and inference. Their architecture is built for parallel processing, so thousands of calculations can run simultaneously, which is exactly what AI algorithms need. That capability is critical if large-scale AI models are to be trained efficiently. Because NVIDIA GPUs can be scaled out across many devices, data centers can keep pace with the rising demands of increasingly complex AI workloads.

How NVIDIA GPUs Are Shaping Data Center Architecture

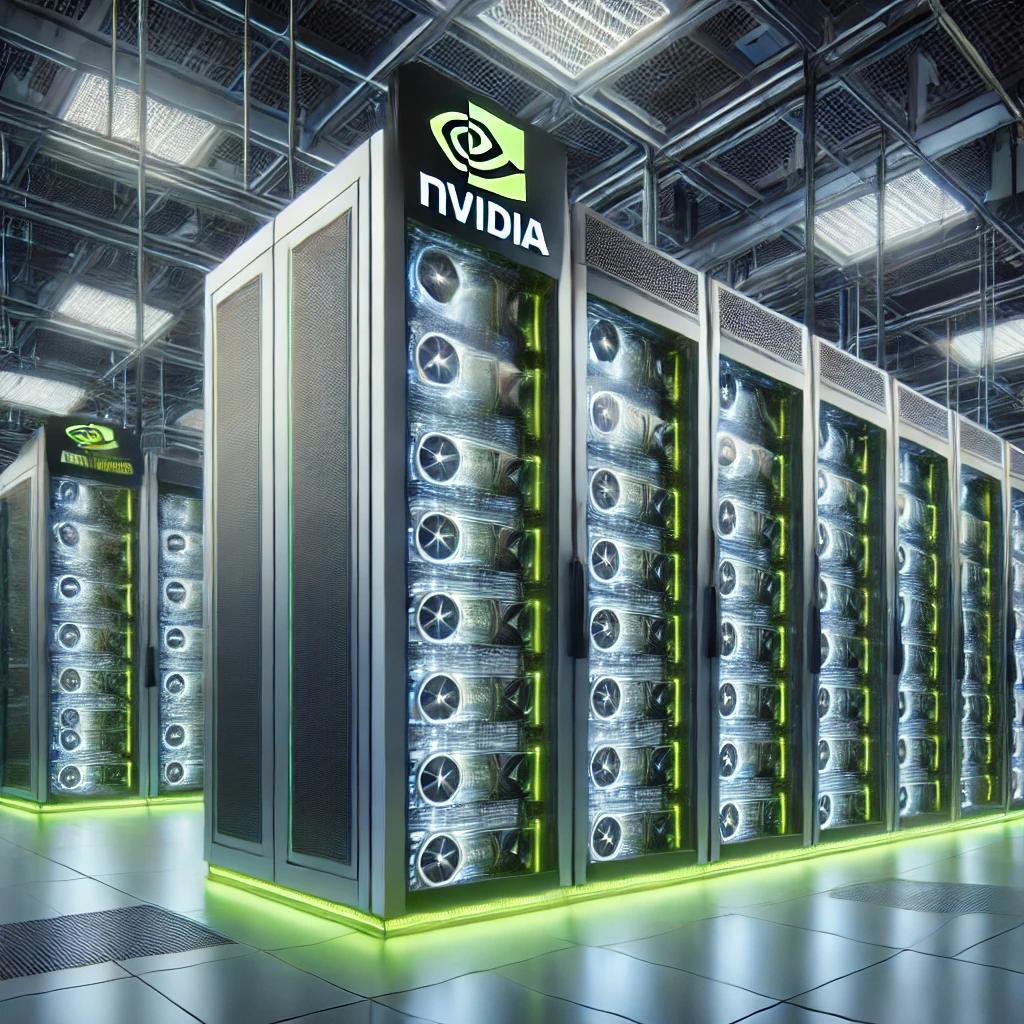

The integration of NVIDIA GPUs has driven a major change in data center architecture. Next-generation data centers are being designed around high-density GPU clusters that support AI workloads efficiently and make full use of these many-core processors.

High-Density GPU Clusters

Modern AI-optimised data centers house large arrays of NVIDIA GPUs organised into dense clusters. Such clusters can process tremendous amounts of data in parallel and dramatically increase the speed of AI training. The layout of such a data center has to account for the high power and cooling requirements of the GPU clusters so that they run efficiently.

Cooling and Power Efficiency

In high-density clusters, the more power the hardware draws, the more heat it produces. Controlling that heat is one of the major challenges. Data centers are therefore adopting cooling technologies such as liquid cooling and precision air conditioning to keep temperatures under control. Efficient power management systems that keep energy consumption within sustainable levels are another critical element.
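The link between power draw and heat can be made concrete with a rough calculation. The sketch below is illustrative only: it assumes that essentially all electrical power drawn by a rack ends up as heat, and the function names, rack layout, and wattages are hypothetical placeholders rather than figures for any particular NVIDIA product. It uses the standard conversions of 1 W to about 3.412 BTU/h and 12,000 BTU/h per ton of refrigeration.

```python
# Rough rack heat-load estimate. All hardware figures passed in are
# hypothetical; substitute measured values for a real deployment.

WATTS_TO_BTU_PER_HOUR = 3.412142   # 1 W of load is about 3.412 BTU/h of heat
BTU_PER_HOUR_PER_TON = 12_000      # one ton of refrigeration


def rack_heat_load_watts(servers_per_rack, gpus_per_server,
                         watts_per_gpu, other_watts_per_server):
    """Total electrical draw of one rack, treated as its heat output."""
    per_server = gpus_per_server * watts_per_gpu + other_watts_per_server
    return servers_per_rack * per_server


def cooling_required(heat_watts):
    """Express a heat load in kW, BTU/h, and tons of refrigeration."""
    btu_per_hour = heat_watts * WATTS_TO_BTU_PER_HOUR
    return {
        "kw": heat_watts / 1000,
        "btu_per_hour": btu_per_hour,
        "tons": btu_per_hour / BTU_PER_HOUR_PER_TON,
    }


if __name__ == "__main__":
    # Hypothetical rack: 4 servers, 8 GPUs each, 500 W per GPU,
    # plus 1000 W per server for CPUs, memory, fans, and networking.
    load = rack_heat_load_watts(4, 8, 500, 1000)
    print(cooling_required(load))
```

Even with these placeholder numbers, a single rack lands in the tens of kilowatts, which is why cooling capacity is planned per rack rather than per room.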

Airflow Management

These high-performance environments also make airflow management a challenge. Poor airflow can create hotspots, considerably reducing the efficiency of cooling systems and, in some cases, causing hardware failures. This is where products like EziBlank’s blanking panels are important: they fill unused rack space and prevent hot air from recirculating around the server racks. Such panels optimise airflow and help keep temperatures stable so that NVIDIA GPUs run smoothly, even under heavy loads.

Global Expansion of AI Data Centers

This demand for AI-optimised data centers is fueling a global expansion, with tech giants like Google, Microsoft, and Amazon at the forefront. These companies are investing aggressively in the infrastructure that supports the next wave of AI advancement, often in partnership with NVIDIA.

Key Players and Partnerships

NVIDIA has been central to the development of these state-of-the-art data centers through its collaboration with the top cloud providers and AI companies. Such partnerships bring newly released GPU technologies into production AI operations, providing a computational backbone for everything from autonomous vehicles to advanced language models.

Strategic Locations

The geographical spread of these data centers is also deliberate. Many are being built in cooler regions to reduce cooling costs, while others are located near renewable energy sources to minimise their environmental impact. This strategic placement improves operational efficiency and supports broader sustainability goals.

How NVIDIA GPUs Are Impacting AI Training Efficiency

Adding NVIDIA GPUs to the AI training workflow has greatly increased the speed and efficiency of AI development.

Accelerated AI Training

NVIDIA GPUs drastically reduce the time it takes to train complex AI models. Thanks to parallel processing, training runs that used to take weeks can now take days or even hours. This acceleration lets AI developers iterate faster, innovate sooner, and produce more refined models.

Energy and Cost Efficiency

Even at this level of performance, NVIDIA GPUs are engineered to be power-efficient. For highly parallel workloads, their architecture delivers more computations per watt than traditional CPUs, making them a more cost-effective option for large-scale AI training. This efficiency not only reduces operational costs but also helps data centers minimise their carbon footprint, an increasingly important consideration.
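The idea of computations per watt can be illustrated with a short calculation. This is a minimal sketch, not a benchmark: the `Device` class, the two example devices, and all throughput and power figures are hypothetical placeholders chosen to show the arithmetic, and they do not describe any real CPU or GPU.

```python
from dataclasses import dataclass


@dataclass
class Device:
    """A compute device described by two hypothetical figures."""
    name: str
    throughput_tflops: float  # sustained throughput in TFLOP/s (assumed)
    power_watts: float        # average power draw under load (assumed)

    def gflops_per_watt(self) -> float:
        """Computations per watt, expressed as GFLOP/s per watt."""
        return self.throughput_tflops * 1000 / self.power_watts


def energy_kwh(device: Device, workload_pflop: float) -> float:
    """Energy needed to finish a fixed workload on one device.

    workload_pflop is the total work in PFLOP (1e15 operations).
    """
    seconds = workload_pflop * 1000 / device.throughput_tflops
    joules = device.power_watts * seconds
    return joules / 3.6e6  # 1 kWh = 3.6e6 J


if __name__ == "__main__":
    # Placeholder devices: the GPU node draws more power but finishes
    # the same workload far sooner, so it uses less energy overall.
    cpu_node = Device("cpu_node", throughput_tflops=1.0, power_watts=200.0)
    gpu_node = Device("gpu_node", throughput_tflops=100.0, power_watts=500.0)
    for dev in (cpu_node, gpu_node):
        print(dev.name, dev.gflops_per_watt(), energy_kwh(dev, 3600.0))
```

The point of the comparison is that a device with higher peak power draw can still use less total energy for a job if its throughput per watt is higher, which is what drives the operating-cost argument above.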

Challenges and Future Trends in AI Data Centers

Although NVIDIA GPUs have only begun to transform AI data centers, challenges remain to be overcome and new trends are on the horizon.

Infrastructural and Scalability Issues

Data center infrastructure has to grow along with the size and complexity of AI models. This applies not only to physical space and power capacity but also to the networking infrastructure that keeps data moving between GPUs and storage. Data centers also need to be designed for scalability from the start, so that future expansion requires minimal changes.

Sustainability and Environmental Impact

Large-scale data centers are becoming an environmental concern. NVIDIA GPUs are often more power-efficient than the alternatives, but the sheer scale of AI training still makes it resource-intensive. Companies are increasingly looking for ways to mitigate this impact through renewable energy, more efficient cooling systems, and smarter resource management strategies.

Future-Proofing with Advanced Technologies

To remain competitive as artificial intelligence reshapes the industry, data centers need to be future-proofed. That means adopting current technologies and best practices in cooling, power management, and infrastructure design. For instance, solutions like EziBlank’s blanking panels help maintain efficient airflow and cooling, so that data centers can support high-density GPU clusters as AI demands rise.

NVIDIA GPUs are at the forefront of this AI revolution, powering a new wave of data centers that make advanced AI applications possible. The company’s GPUs are reshaping data center architecture, enabling faster and more efficient AI training and extending what AI development can achieve. As demand for AI continues to rise, innovative data center solutions will be needed to meet these challenges. The future of AI looks promising, and it runs on infrastructure that NVIDIA GPUs help build.